Abstract

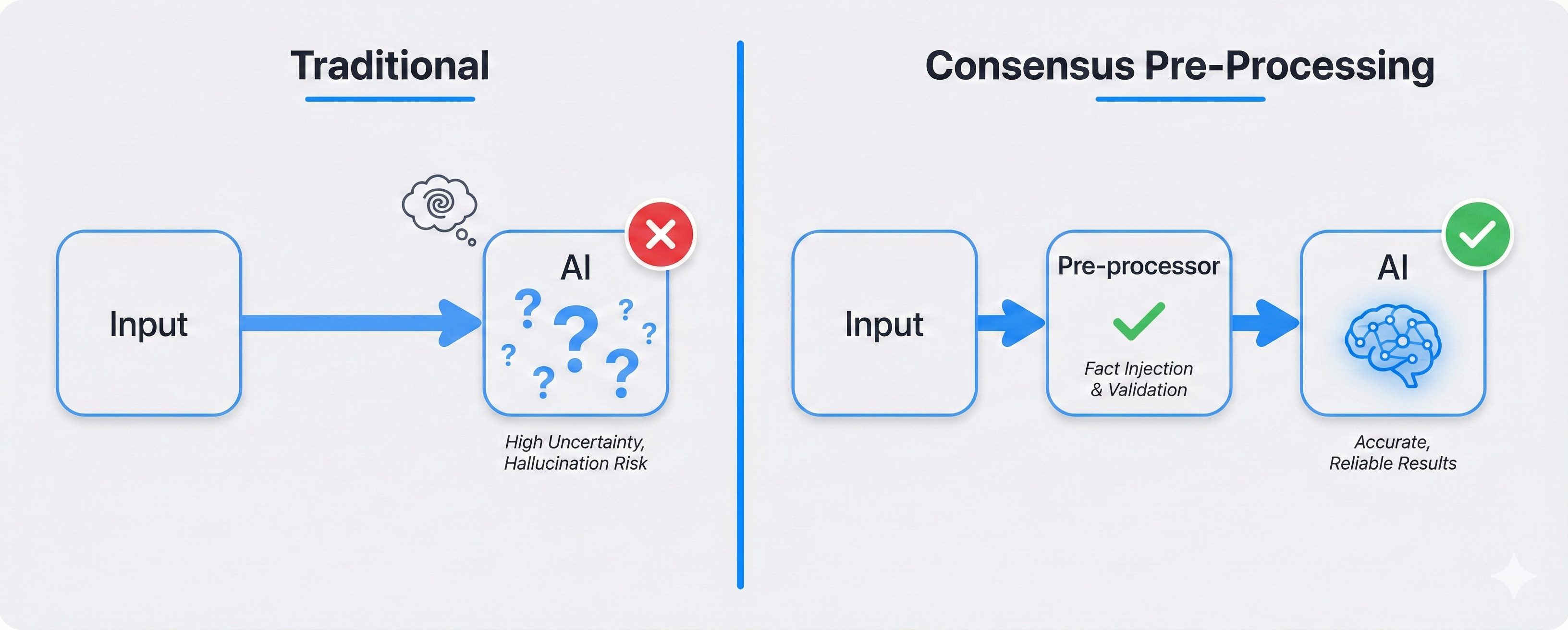

Large Language Models have revolutionized data processing, but their deployment often suffers from latency, cost, and hallucination risks. This paper presents a hybrid architecture that combines deterministic rule engines with data-driven consensus validation as a pre-processing layer before LLM analysis. By injecting verified consensus values directly into AI prompts, the system reduces hallucination on factual queries while reserving LLM capacity for contextual reasoning. The architecture enables fast rule-based validation, provides verified facts to the LLM, and offers graceful degradation when AI services are unavailable.

The Core Insight

Instead of asking AI: "Is 4,200 passing yards good for a QB?"

You tell AI: "Consensus data shows NFL QBs average 3,800 yards (p50), elite is 4,800 (p90). This QB has 4,200. Already validated as above average."

AI receives facts, not questions. It reasons about implications, not facts. It cannot hallucinate the league average because you provided the real number.

1. Introduction

The integration of Large Language Models into production systems presents a fundamental tension. LLMs excel at contextual reasoning, natural language understanding, and handling ambiguous inputs. However, they also introduce significant challenges: variable latency, unpredictable costs based on token usage, and the persistent risk of hallucination on factual queries.

This paper proposes a pre-processing architecture that handles deterministic checks and consensus-based validation before AI involvement, then provides the LLM with pre-validated data enriched with current consensus values.

2. Current Approaches and Their Limitations

LLM-First Architectures

- Latency: Every request incurs up to several seconds of LLM processing time

- Cost: Token usage for obvious checks is wasteful

- Hallucination: LLMs can confidently state incorrect facts

- Inconsistency: Same inputs can produce different outputs

Alternative AI Approaches

| Approach | How It Works | Problem |

|---|---|---|

| RAG | Retrieve docs, inject into context | AI still interprets raw data, can hallucinate meaning |

| Fine-tuning | Train model on domain data | Expensive, data gets stale, can't update easily |

| Guardrails | Let AI generate, validate after | Post-hoc correction wastes tokens |

These approaches try to make AI know more. Consensus pre-processing instead tells AI what it needs to know at inference time.

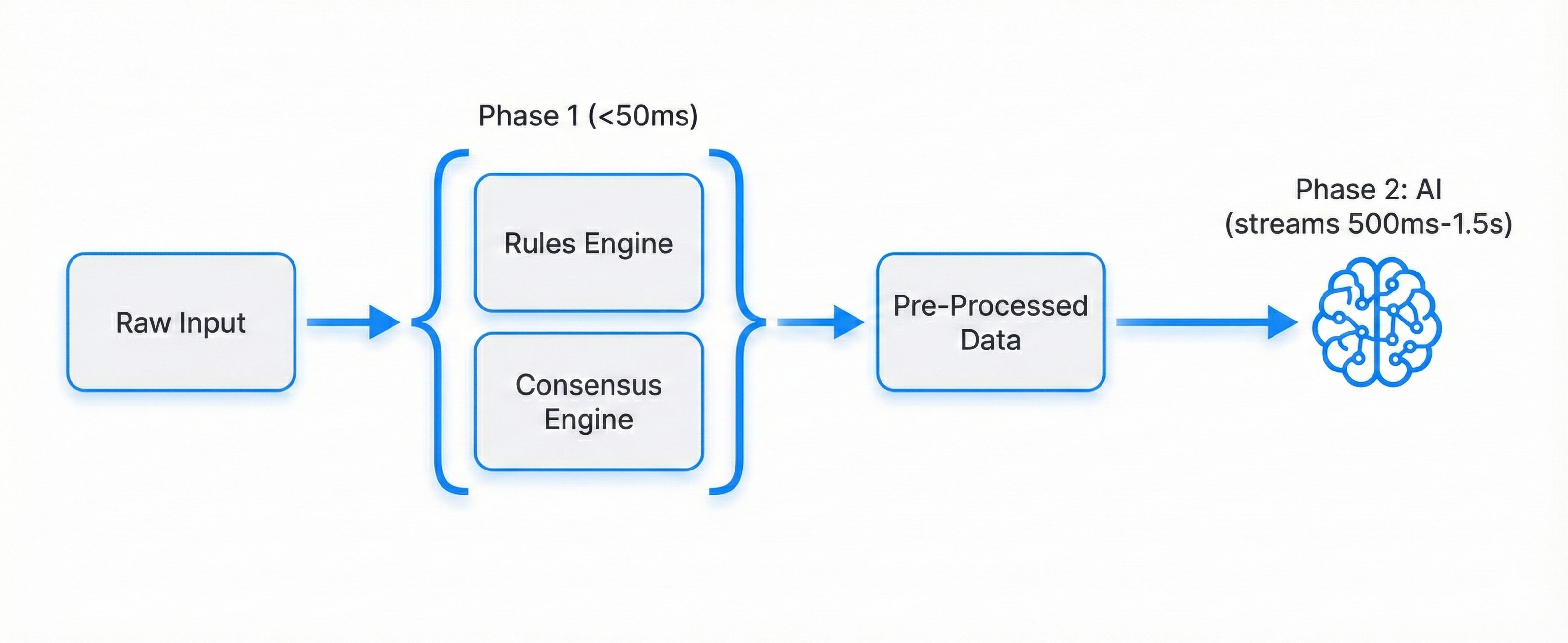

3. Proposed Architecture

Phase 1 (Pre-Processor) handles what can be determined without AI:

- Name/team validation (catch typos like "Partick Mahomes" or "Kansas City Cheifs")

- Format validation (stats in correct format, season year valid)

- Impossible values (negative yards, touchdowns exceeding pass attempts)

- Consensus comparison (is 4,200 passing yards elite, average, or below average for a QB?)

- Position-specific rules (a QB with 1,500 rushing yards triggers review)

Phase 2 (AI Analysis) handles what requires reasoning:

- Performance trend analysis (improving or declining over seasons?)

- Context interpretation (stats affected by injuries, new coaching staff?)

- Comparative analysis (how does this QB compare to others in similar systems?)

- Natural language scouting reports

The Consensus Engine

Consensus Data Sources

Consensus values can be populated through two approaches:

- Continuous external scraping: Automated pipelines pull current statistics from authoritative sources (e.g., NFL official stats, ESPN APIs) and update consensus ranges in real-time.

- Historical acceptance: Values previously validated and accepted as correct are stored in a database like AWS DynamoDB. A Redis cache layer provides fast lookups, falling back to DynamoDB on cache miss.

Both approaches can be combined—external scraping establishes baseline ranges while user-accepted values refine them for specific contexts.

4. Performance Characteristics

| Aspect | LLM-First | Pre-Processor + AI |

|---|---|---|

| Rule validation | Handled by LLM | Fast deterministic checks |

| Factual accuracy | LLM may hallucinate | Facts provided to LLM |

| Token efficiency | AI processes raw data | AI receives pre-validated data |

| Availability (AI down) | Complete failure | Phase 1 still works |

5. Key Advantages

Facts, Not Questions

AI receives "League average IS 3,800 yards" not "What's the average?"

Real-Time Updates

Change consensus data instantly. No model retraining required.

No Fact Hallucination

Rules and consensus handle knowable facts.

Graceful Degradation

Phase 1 works even if AI service is down.

Full Auditability

"Rule found X, Consensus found Y, AI inferred Z"

Cost Efficient

AI tokens only for complex reasoning.

6. Example Output

7. Conclusion

Consensus-based pre-processing represents a pragmatic middle ground between pure AI systems and rigid rule engines. By handling deterministic checks efficiently and providing verified facts to AI systems, this architecture achieves faster response times, lower costs, higher reliability, and better auditability.

The pattern is domain-agnostic and applicable wherever established benchmarks can inform validation.

Want to know more about consensus-based AI pre-processing? Contact me, I'm always happy to chat!