Abstract

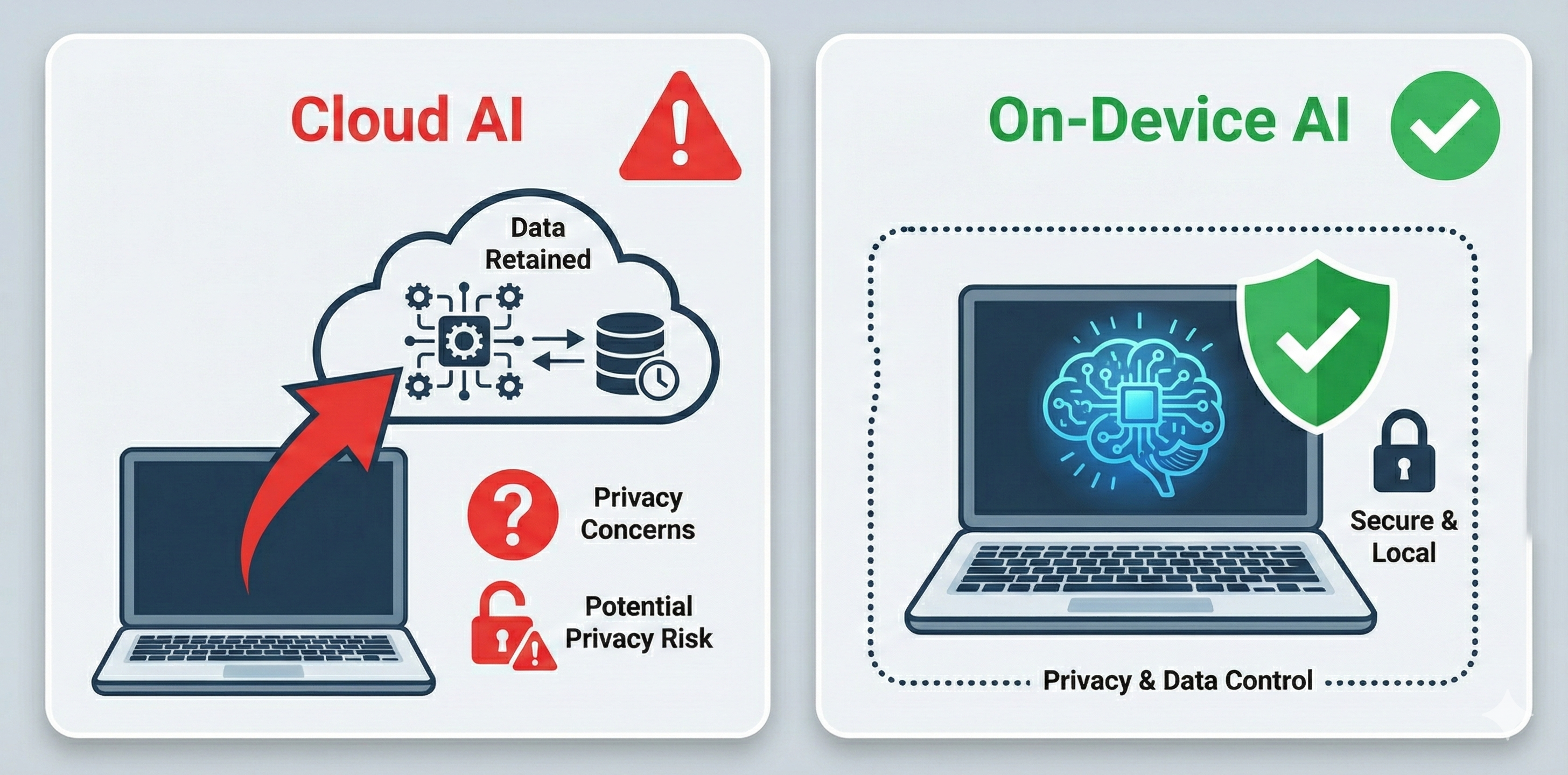

The current AI landscape assumes cloud processing: user data goes to API, inference runs on cloud GPUs, results return to user, provider stores/processes your data. This model has been accepted because "that's how AI works." But Apple Silicon and the MLX framework have changed the equation. For personal, sensitive applications, on-device LLM provides privacy by architecture rather than policy—your data physically cannot leave your device.

1. The Privacy Paradox

Every AI privacy policy says some version of:

"We don't use your data to train models... except for improving our services... and we may share with partners... and data is retained for..."

Privacy by Policy

- Trust a company's promise

- Policies can change

- Data breaches possible

- Requires faith

Privacy by Architecture

- Data cannot leave device

- Technically enforced

- No external exposure

- Verifiable

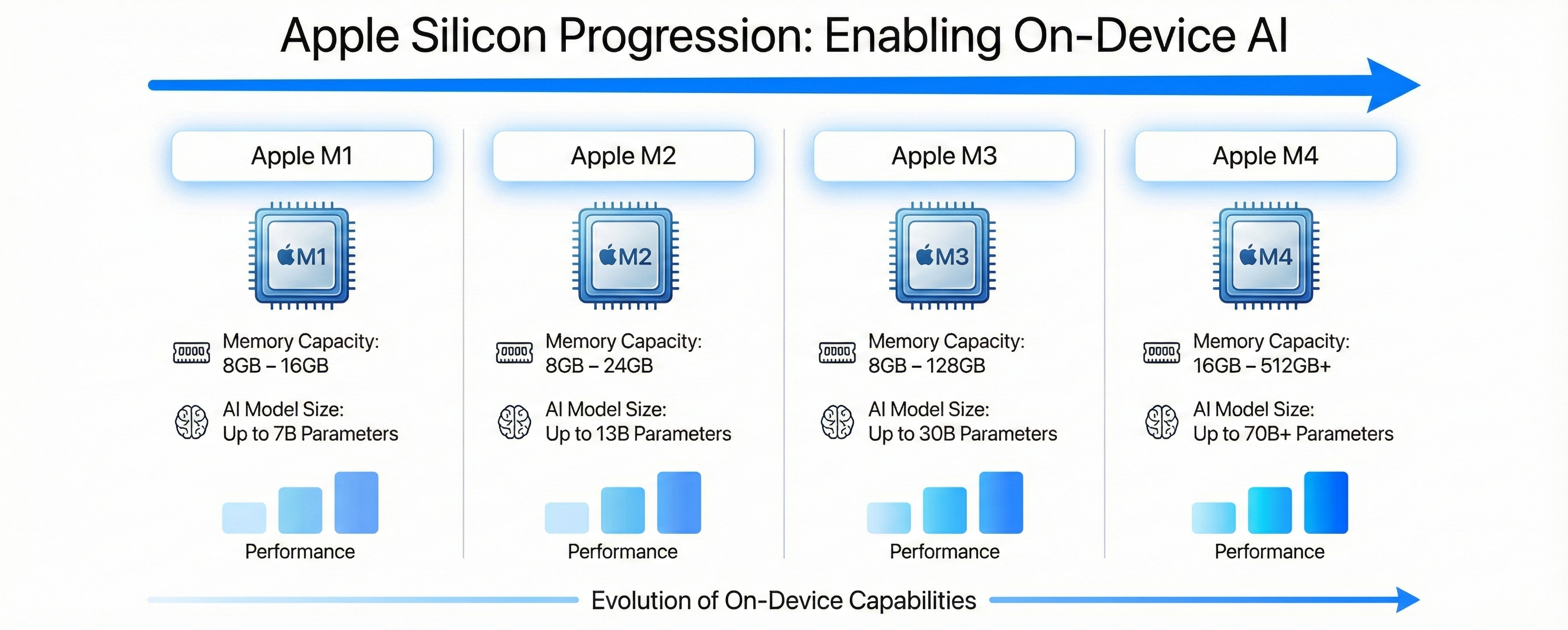

2. What Changed: Apple Silicon + MLX

For years, on-device LLM was impractical. Consumer hardware couldn't run meaningful models at usable speeds. Apple Silicon changed this:

| Chip | Unified Memory | Memory Bandwidth | LLM Performance |

|---|---|---|---|

| M3 | 8-128GB (Ultra) | 100-800 GB/s | 25-115 t/s depending on tier |

| M4 | 16-128GB (Max) | 120-546 GB/s | 30-45 t/s on 33-70B models |

| M5 | 16-192GB | 153+ GB/s | 19-27% faster than M4 |

Note: Memory bandwidth matters more than chip generation for LLM inference. An M3 Max (400 GB/s) outperforms an M4 Pro for token generation.

Apple's MLX Framework

Apple's MLX framework optimizes specifically for this hardware: native Metal GPU acceleration, unified memory eliminates CPU/GPU transfer, quantized models fit in available RAM, and performance rivals cloud inference for many tasks.

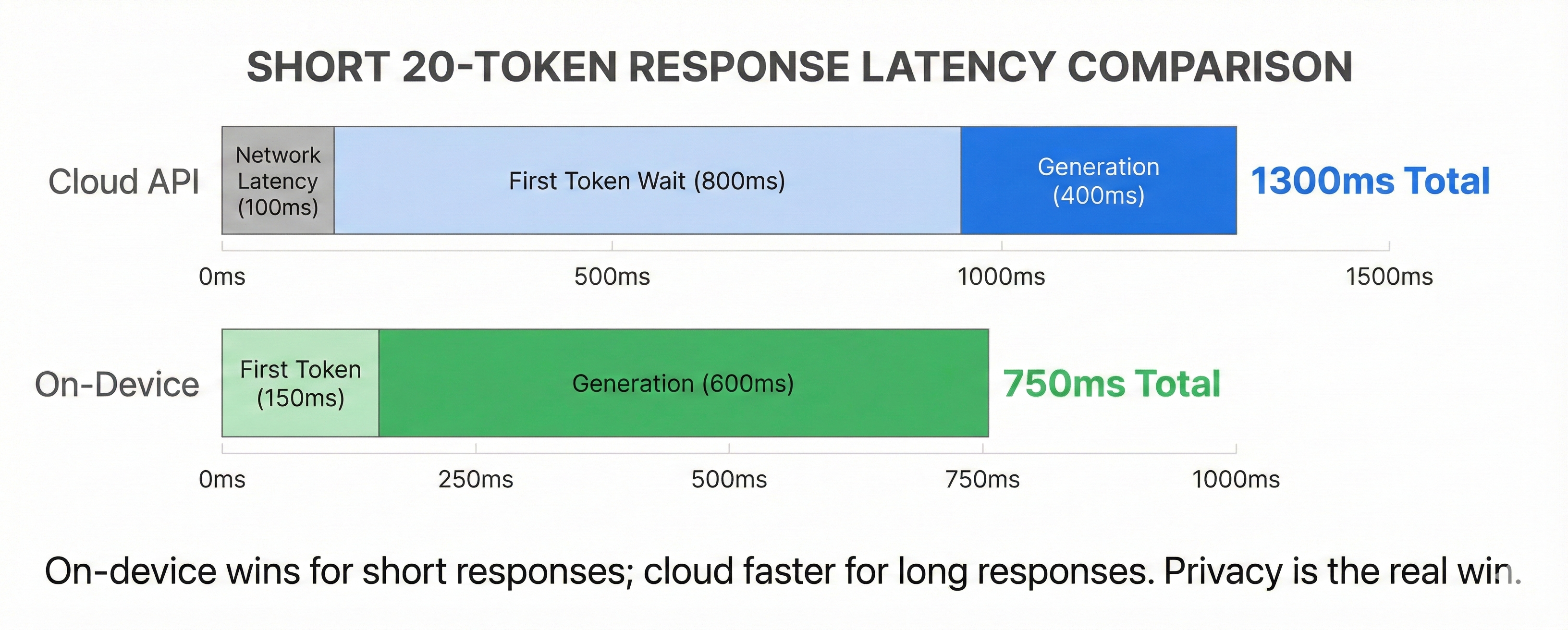

3. Performance Reality Check

| Metric | On-Device (M3 Pro, Llama 8B) | Cloud API (Claude/GPT-4o) |

|---|---|---|

| First token | 100-200ms | 200ms-2s (varies by load) |

| Tokens/second | 25-50 | 30-80 |

| 100-token response | 2-4 seconds | 1.5-3 seconds |

On-device is competitive for shorter responses. The lack of network round-trip helps, but cloud models are often faster at raw token generation. The win for on-device is privacy, not speed.

4. The Cost Equation

| Cost Type | Cloud API (GPT-4 Turbo) | On-Device |

|---|---|---|

| Per-query cost | ~$0.015 (500 tokens) | ~$0.0001 (electricity) |

| 100 queries/day | $45/month | ~$0.30/month |

| Hardware | N/A | Already owned (Mac) |

For users who already own compatible hardware, on-device running costs are dramatically lower than API fees. The comparison assumes you're not buying a Mac specifically for this purpose.

5. What On-Device Enables

True Privacy

Conversations never leave your Mac. No data retention policies to parse.

Offline Operation

Works on airplanes, in poor connectivity. Always available.

No Subscriptions

One-time hardware investment. No per-token fees or rate limits.

Data Sovereignty

You own your data completely. Export anytime. Delete means delete.

6. Use Cases That Demand On-Device

Personal Knowledge Management

- Notes, journals, private thoughts

- Health information

- Financial data

- Family information

Professional Confidentiality

- Legal documents

- Medical records

- Business strategy

- Competitive intelligence

Sensitive Personal Tasks

- Therapy/mental health journaling

- Relationship advice

- Career planning

- Personal struggles

Would you send your private journal to a cloud API? On-device removes the question.

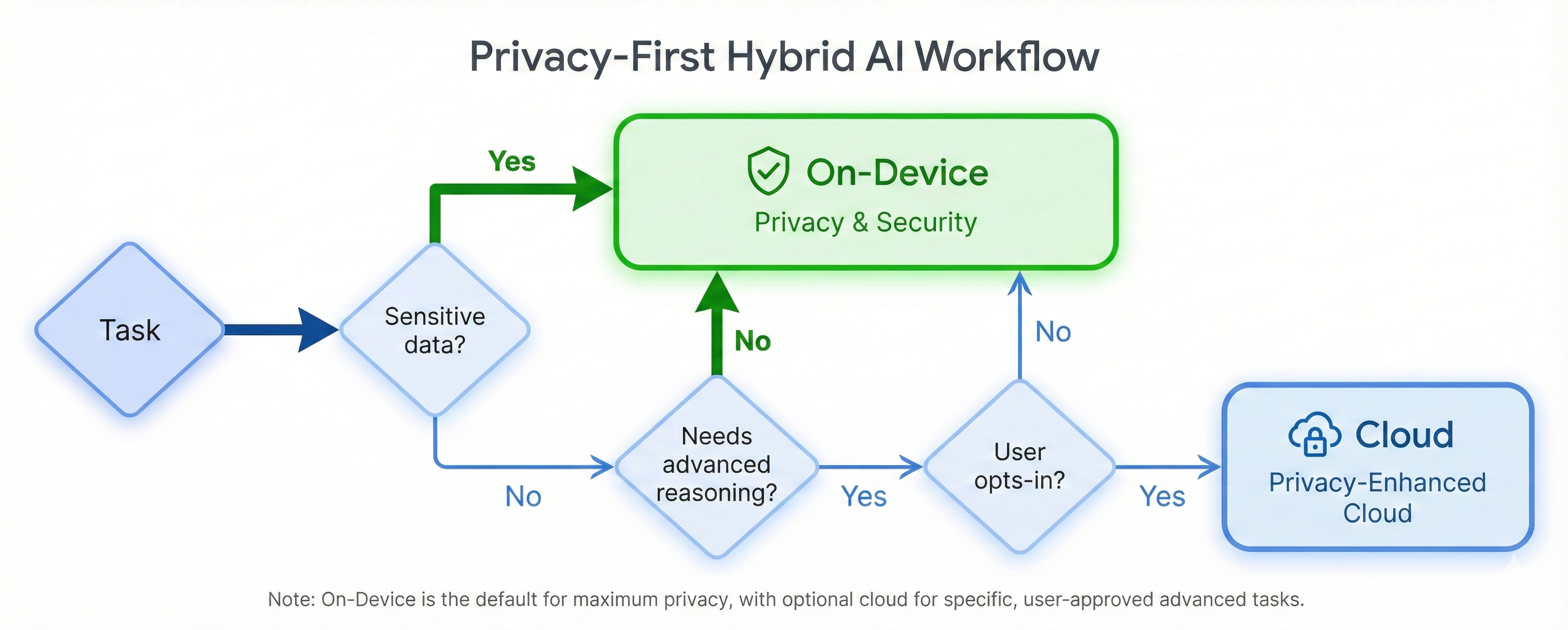

7. The Hybrid Approach

On-device doesn't mean cloud-never. A smart architecture uses both:

| Task | Processing | Reasoning |

|---|---|---|

| Private data analysis | On-device | Sensitive |

| Personal knowledge queries | On-device | Personal context |

| Complex reasoning | Cloud (opt-in) | User chooses |

| Public information | Cloud | No privacy concern |

User controls when data leaves device. Default is local.

8. Limitations and Trade-offs

Current Limitations

- Maximum model size bounded by RAM

- Not competitive with GPT-4/Claude for complex reasoning

- Less capable at specialized tasks

- Requires modern Apple hardware

What On-Device Does Well

- Summarization

- Entity extraction

- Simple Q&A

- Text classification

- Personal context understanding

9. Conclusion

On-device LLM isn't about avoiding cloud AI. It's about choosing when your data leaves your device.

For personal, sensitive, private use cases, the answer should be: never.

The technology now exists to make that practical.

References

- MLX — Apple's machine learning framework for Apple Silicon

- Apple Silicon GPU Architecture — Apple developer documentation

- Llama — Meta's open-source LLM models

Want to know more about on-device LLM? Contact me, I'm always happy to chat!