Abstract

Current AI systems treat all information as equally important forever. Your offhand mention of a restaurant three years ago has the same weight as your wedding anniversary. This paper presents a temporal memory architecture inspired by human cognition. Facts have half-lives and decay naturally unless reinforced, while time-sensitive information stays relevant until an anchor point and then fades rapidly. The result is AI that feels more human and resolves contradictions between old and new information gracefully.

1. The Problem with Perfect Recall

Current AI systems treat all information as equally important forever. Your offhand mention of a restaurant three years ago has the same weight as your wedding anniversary.

This isn't how human memory works. And it shouldn't be how AI memory works.

Human memory has:

- Decay — Unimportant details fade naturally

- Anchoring — Some facts stay vivid until an event passes, then fade fast

- Reinforcement — Repeated information strengthens

- Importance weighting — Some facts matter more than others

- Temporal context — When you learned something affects relevance

AI systems have: a vector database that stores everything forever.

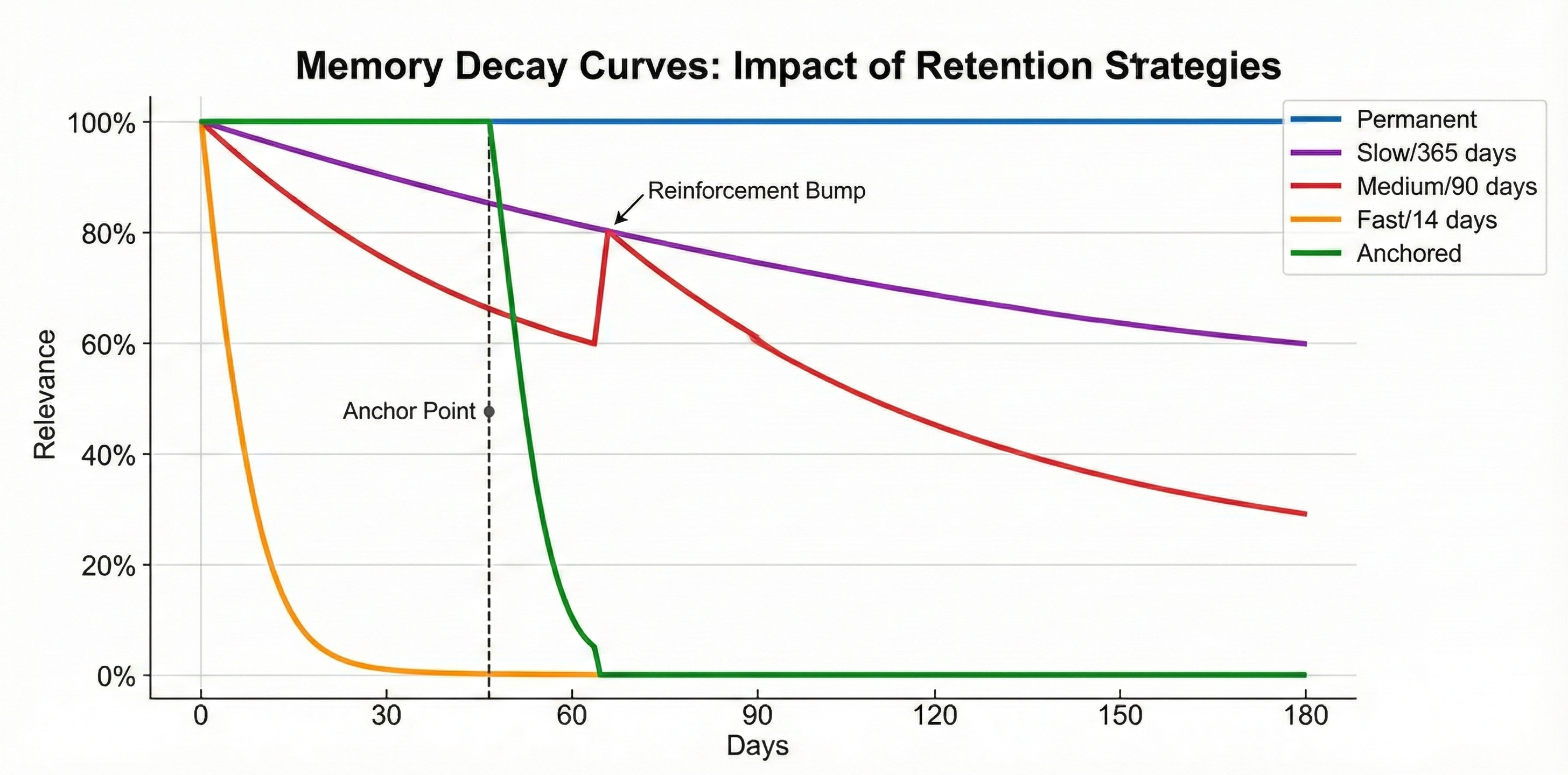

2. The Decay Model

Borrowing from human memory research, this architecture gives each fact a half-life:

| Decay Type | Half-Life | Use Case |
|---|---|---|
| Permanent | ∞ | Core identity, key relationships |
| Slow | 365 days | Important professional knowledge |
| Medium | 90 days | General information |
| Fast | 14 days | Recent context, current projects |
| Ephemeral | 3 days | Momentary details, casual mentions |

A fact starts at full relevance and decays over time unless reinforced.
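A minimal sketch of the decay rule, assuming standard exponential half-life decay: relevance is R(t) = 0.5^(t / h), where t is the fact's age in days and h is its half-life from the table. The text does not fix the shape of the curve, so the exponential form and the names `HALF_LIFE_DAYS` and `relevance` are illustrative assumptions.

```python
# Half-lives in days from the decay-type table; None marks a permanent fact.
HALF_LIFE_DAYS = {
    "permanent": None,
    "slow": 365.0,
    "medium": 90.0,
    "fast": 14.0,
    "ephemeral": 3.0,
}


def relevance(age_days: float, decay_type: str) -> float:
    """Return relevance in [0, 1] for a fact of the given age and decay type.

    Assumes exponential decay: relevance halves once per half-life.
    """
    if age_days < 0:
        raise ValueError("age_days must be non-negative")
    half_life = HALF_LIFE_DAYS[decay_type]
    if half_life is None:  # permanent facts never decay
        return 1.0
    return 0.5 ** (age_days / half_life)


if __name__ == "__main__":
    for decay_type in HALF_LIFE_DAYS:
        print(f"{decay_type:>9}: {relevance(90, decay_type):.3f} after 90 days")
```

Under this assumption a medium-decay fact sits at exactly half relevance after 90 days, while an ephemeral fact of the same age is effectively gone.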

3. Anchored Decay

Not all facts decay gradually. Some have an anchor point—a date or milestone where relevance changes dramatically.

| Fact | Anchor Point | Behavior |
|---|---|---|
| "Concert on December 1st" | Event date | High relevance until Dec 1, drops sharply after |
| "Broke my arm, cast on" | 6-week recovery | Critical while cast is on. Fades quickly once healed. |
| "Project deadline March 15" | Deadline | Increasingly relevant approaching date, irrelevant after |
| "Taking antibiotics" | 10-day course | High during treatment, drops when course ends |

The Key Insight

These facts don't decay from day one. They hold full relevance until the anchor, then drop off a cliff. A broken arm is critical while the cast is on: it affects what you can do, what help you need, and every plan you make. Six weeks later, when the cast comes off, the fact decays with a 7-day half-life.
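One way to express anchored decay, sketched under stated assumptions: relevance is held at 1.0 until the anchor date, then decays exponentially with a short post-anchor half-life (7 days by default, matching the broken-arm example). The function name `anchored_relevance` and the use of `datetime.date` for anchors are hypothetical choices. The deadline row, which grows more relevant as the date approaches, would need an extra ramp-up term that this sketch leaves out.

```python
from datetime import date


def anchored_relevance(today: date, anchor: date,
                       post_anchor_half_life_days: float = 7.0) -> float:
    """Relevance for a fact tied to an anchor date.

    Up to and including the anchor, the fact stays fully relevant; after it,
    relevance halves every `post_anchor_half_life_days` days.
    """
    days_past_anchor = (today - anchor).days
    if days_past_anchor <= 0:
        return 1.0  # anchor not reached yet: hold full relevance
    return 0.5 ** (days_past_anchor / post_anchor_half_life_days)


if __name__ == "__main__":
    cast_off = date(2024, 3, 1)  # hypothetical end of a 6-week recovery
    for day in (date(2024, 2, 20), cast_off, date(2024, 3, 8), date(2024, 3, 29)):
        print(day, round(anchored_relevance(day, cast_off), 3))
```

Keeping the pre-anchor phase flat is what separates this from ordinary decay: the fact costs nothing in relevance while it still shapes plans, and only starts fading once the anchor passes.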

4. The Reinforcement Mechanism

Decay alone would eventually forget everything. Reinforcement counterbalances it:

| Mentions | Half-Life Multiplier | Effect |
|---|---|---|
| 1 | 1.0 | Normal decay |
| 5 | 1.5 | Half-life 50% longer |
| 10 | 2.0 | Twice as persistent |
| 20+ | 3.0 | Effectively permanent |

Things you talk about repeatedly become core knowledge. Things mentioned once fade away.
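A sketch of how the reinforcement table could scale a fact's half-life. It assumes the multiplier applies to the half-life, and that mention counts between table rows take the multiplier of the highest row they have reached; the table itself gives no interpolation rule. `reinforcement_multiplier` and `effective_half_life` are hypothetical names.

```python
# (minimum mentions, half-life multiplier) pairs from the reinforcement table,
# ordered from the highest threshold down. Fewer than 5 mentions -> 1.0.
REINFORCEMENT_STEPS = [(20, 3.0), (10, 2.0), (5, 1.5)]


def reinforcement_multiplier(mentions: int) -> float:
    """Return the half-life multiplier for a fact mentioned `mentions` times."""
    if mentions < 1:
        raise ValueError("a stored fact has at least one mention")
    for threshold, multiplier in REINFORCEMENT_STEPS:
        if mentions >= threshold:
            return multiplier
    return 1.0  # 1-4 mentions: normal decay


def effective_half_life(base_half_life_days: float, mentions: int) -> float:
    """Stretch the base half-life by the reinforcement multiplier."""
    return base_half_life_days * reinforcement_multiplier(mentions)


if __name__ == "__main__":
    # A medium (90-day) fact mentioned ten times lasts twice as long.
    print(effective_half_life(90.0, 10))
```

Because reinforcement only stretches the half-life, a fact that stops being mentioned still fades; it just fades more slowly.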

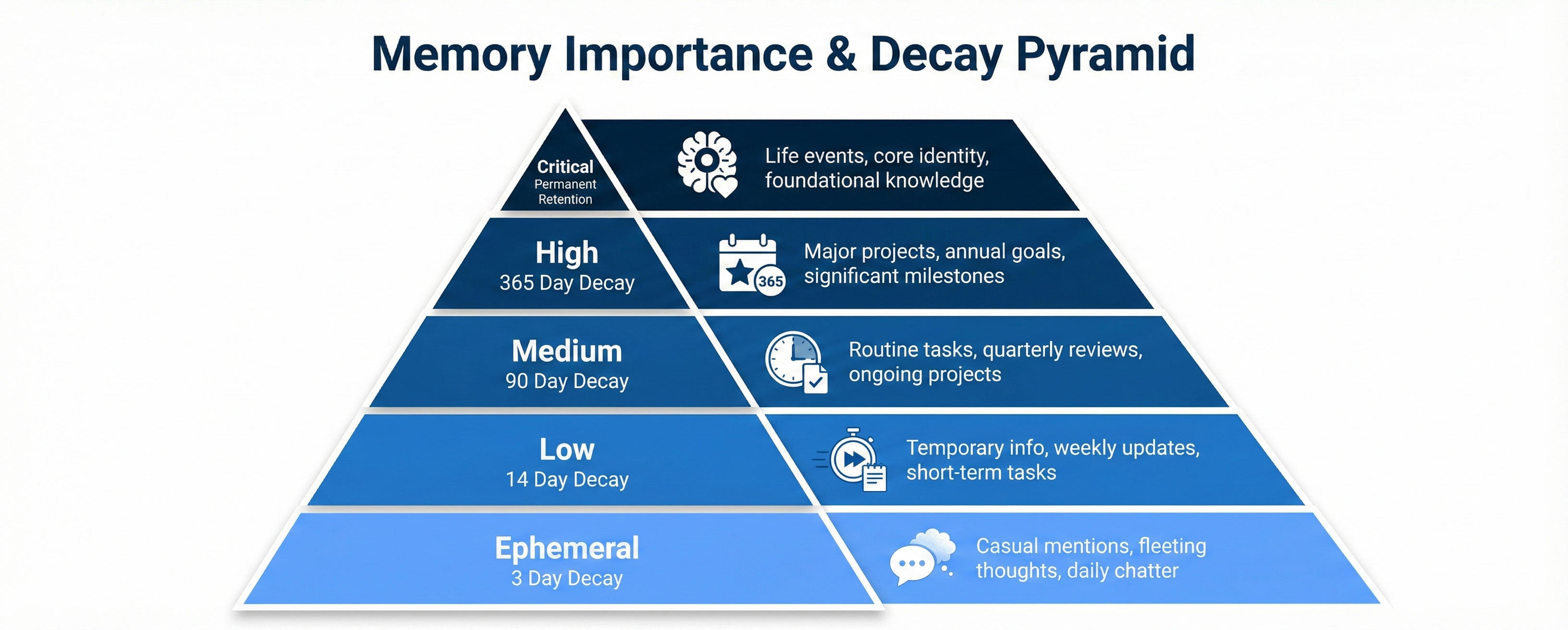

5. Importance Classification

Not all facts are created equal. At capture time, classify importance:

| Level | Description | Initial Half-Life |
|---|---|---|
| Critical | Life events, core relationships | Permanent |
| High | Career, health, major decisions | Slow (365 days) |
| Medium | General knowledge, preferences | Medium (90 days) |
| Low | Context, casual mentions | Fast (14 days) |
| Trivial | Background noise | Ephemeral (3 days) |

The AI learns that "I got married to Sarah" is Critical, while "I had coffee this morning" is Trivial.
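Once a fact has been classified, turning its importance level into an initial half-life is a simple lookup. The sketch below assumes a Python `Enum` for the levels and a small `Fact` record; both are hypothetical. The classification itself (deciding that "I got married to Sarah" is Critical) is left to whatever model does it at capture time and is not shown here.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional


class Importance(Enum):
    CRITICAL = "critical"
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"
    TRIVIAL = "trivial"


# Initial half-lives in days from the importance table; None = permanent.
INITIAL_HALF_LIFE_DAYS: Dict[Importance, Optional[float]] = {
    Importance.CRITICAL: None,
    Importance.HIGH: 365.0,
    Importance.MEDIUM: 90.0,
    Importance.LOW: 14.0,
    Importance.TRIVIAL: 3.0,
}


@dataclass
class Fact:
    text: str
    importance: Importance  # assigned by a classifier at capture time

    @property
    def initial_half_life_days(self) -> Optional[float]:
        return INITIAL_HALF_LIFE_DAYS[self.importance]


if __name__ == "__main__":
    facts = [
        Fact("I got married to Sarah", Importance.CRITICAL),
        Fact("I had coffee this morning", Importance.TRIVIAL),
    ]
    for fact in facts:
        print(fact.text, "->", fact.initial_half_life_days)
```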

6. Why This Matters

Without Decay

AI confidently recommends sushi restaurants based on a preference you mentioned once, years ago.

With Decay

AI weights what you've said recently more heavily, so its suggestions reflect your current preferences.

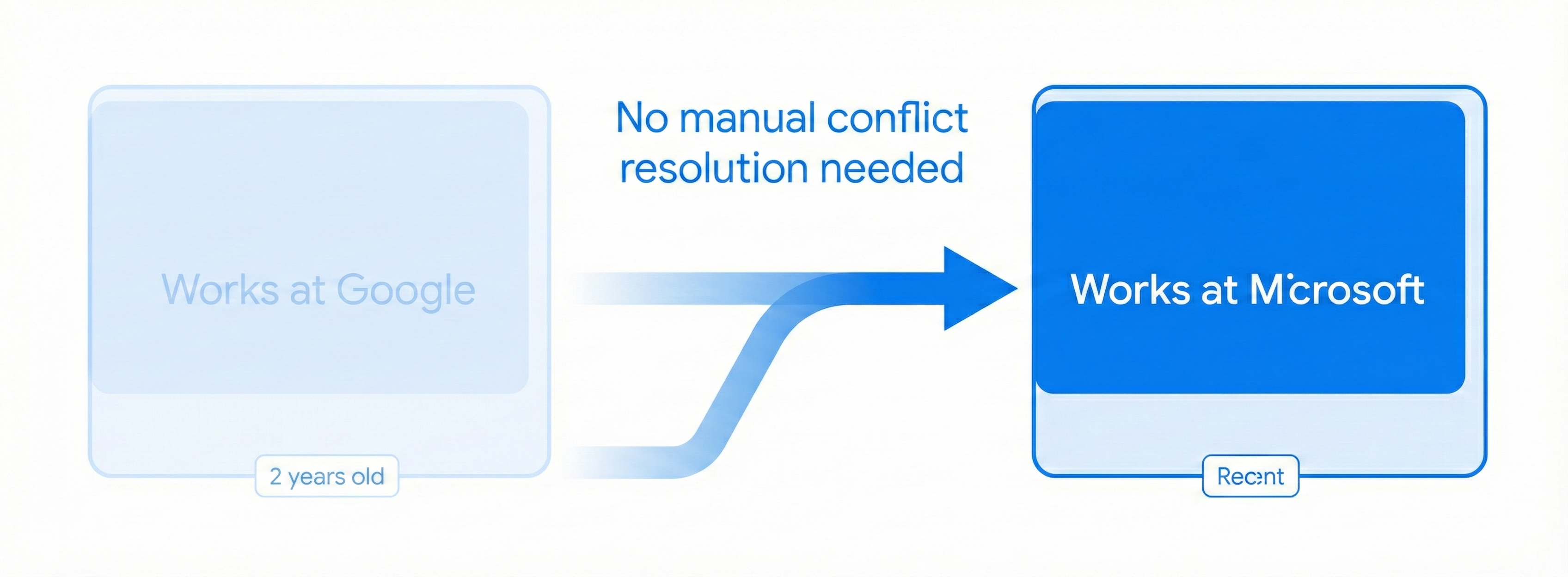

7. Conflict Resolution

Temporal memory elegantly handles contradictions:

Old fact: "John works at Google" (2 years old, decayed to 15%)

New fact: "John works at Microsoft" (just captured, 100%)

Without decay, the system holds two contradictory facts and must resolve them explicitly. With decay, the old fact naturally gives way to the new one, and no explicit conflict resolution is needed.
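A minimal sketch of that behavior: when several facts fill the same slot (here, John's employer), answer with the one whose current relevance is highest. It assumes exponential half-life decay and a 365-day half-life for both facts; `StoredFact`, `current_relevance`, and `resolve` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StoredFact:
    value: str             # e.g. the employer in "John works at ..."
    age_days: float        # time since the fact was captured
    half_life_days: float


def current_relevance(fact: StoredFact) -> float:
    """Exponential decay: relevance halves once per half-life."""
    return 0.5 ** (fact.age_days / fact.half_life_days)


def resolve(candidates: List[StoredFact]) -> StoredFact:
    """Pick the most relevant of several facts that fill the same slot."""
    return max(candidates, key=current_relevance)


if __name__ == "__main__":
    employer_facts = [
        StoredFact("Google", age_days=730, half_life_days=365),
        StoredFact("Microsoft", age_days=0, half_life_days=365),
    ]
    winner = resolve(employer_facts)
    print(winner.value, round(current_relevance(winner), 2))  # prints: Microsoft 1.0
```

The old fact is never deleted or overwritten by a special rule; it simply loses the comparison because it has had longer to decay.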

8. Privacy by Forgetting

Temporal memory is also a privacy feature:

- Natural Data Lifecycle: Sensitive information naturally decays over time

- Casual Disclosures Protected: One-time mentions don't persist forever

- No Manual Deletion: Users don't need to actively manage old data

- Matches Expectations: The system mirrors human assumptions about memory

"I told you that once, years ago" shouldn't be perfect recall.
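Low relevance only protects privacy if faded facts eventually leave storage. A minimal sketch, assuming exponential half-life decay and a hypothetical purge threshold of 1% relevance, of a periodic sweep that drops facts once they fade below it. The threshold value and the names `MemoryRecord` and `purge_faded` are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional

PURGE_THRESHOLD = 0.01  # hypothetical: drop facts below 1% relevance


@dataclass
class MemoryRecord:
    text: str
    age_days: float
    half_life_days: Optional[float]  # None = permanent, never purged


def relevance(record: MemoryRecord) -> float:
    """Exponential decay; permanent records stay at full relevance."""
    if record.half_life_days is None:
        return 1.0
    return 0.5 ** (record.age_days / record.half_life_days)


def purge_faded(records: List[MemoryRecord],
                threshold: float = PURGE_THRESHOLD) -> List[MemoryRecord]:
    """Return only the records still at or above the relevance threshold."""
    return [r for r in records if relevance(r) >= threshold]


if __name__ == "__main__":
    memory = [
        MemoryRecord("Married to Sarah", age_days=1000, half_life_days=None),
        MemoryRecord("Mentioned a restaurant", age_days=30, half_life_days=3),
        MemoryRecord("Working on a report", age_days=7, half_life_days=14),
    ]
    for record in purge_faded(memory):
        print(record.text)
```

Returning a filtered list keeps the sketch free of side effects; a real store would delete the dropped records so they cannot be recovered.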

9. The Philosophical Argument

Perfect memory isn't a feature—it's a bug.

Human memory evolved to forget because:

- Storage is finite

- Relevance changes over time

- Old information can mislead

- Forgetting enables growth

AI assistants that remember everything create an uncanny, uncomfortable experience. They know things you've forgotten you ever said.

Temporal memory creates AI that feels more human.

10. Conclusion

Temporal memory represents a fundamental shift in how AI systems handle knowledge over time. Instead of treating memory as a static database, it becomes a living system that naturally prioritizes recent and important information while letting the irrelevant fade away.

The result is AI that better understands context, handles contradictions gracefully, and respects the natural lifecycle of information.

References

- Ebbinghaus Forgetting Curve — The psychological research that inspired this architecture

- Spaced Repetition — Memory research on retention and recall patterns

Want to know more about temporal memory in AI? Contact me; I'm always happy to chat!