Abstract

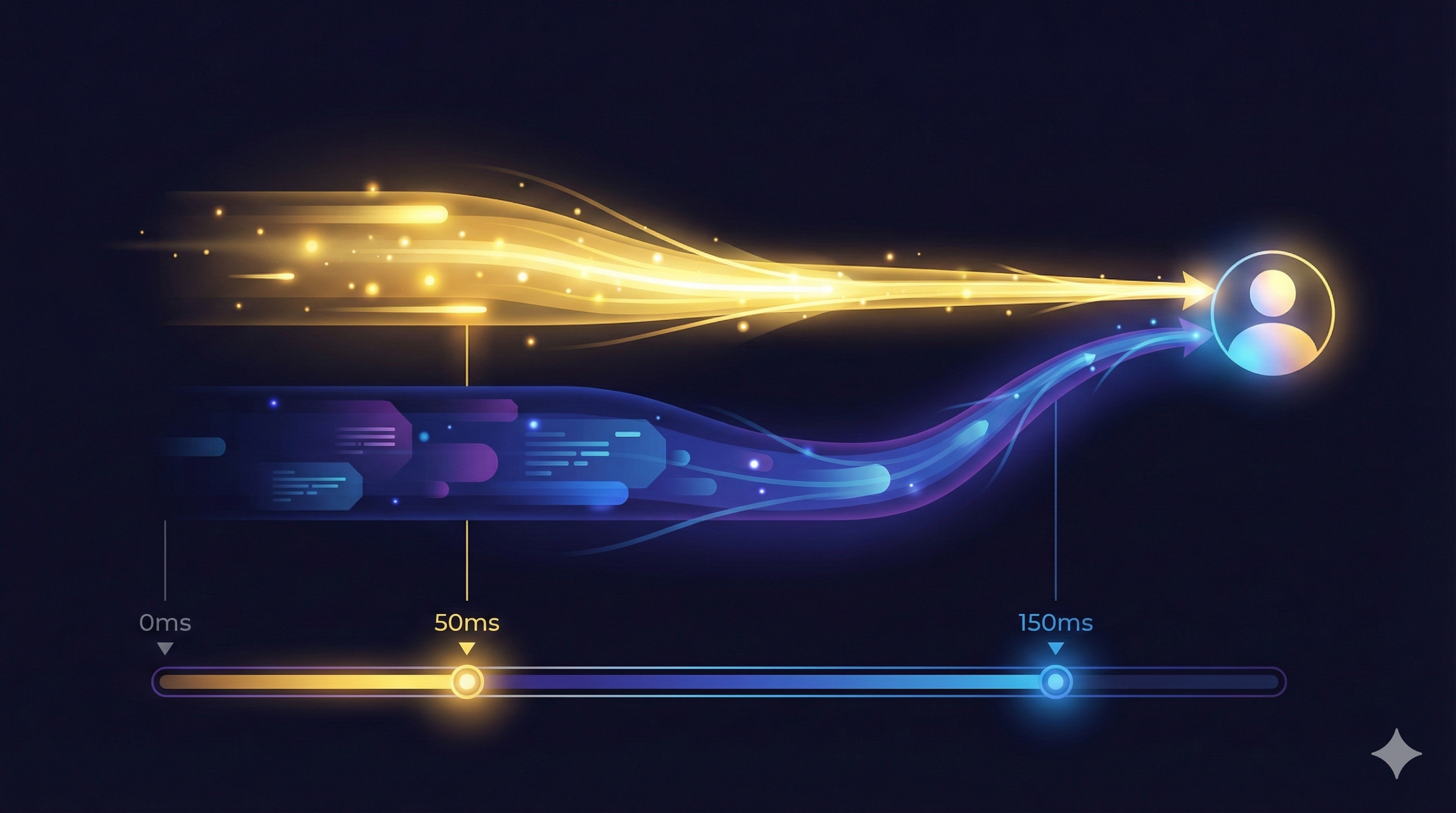

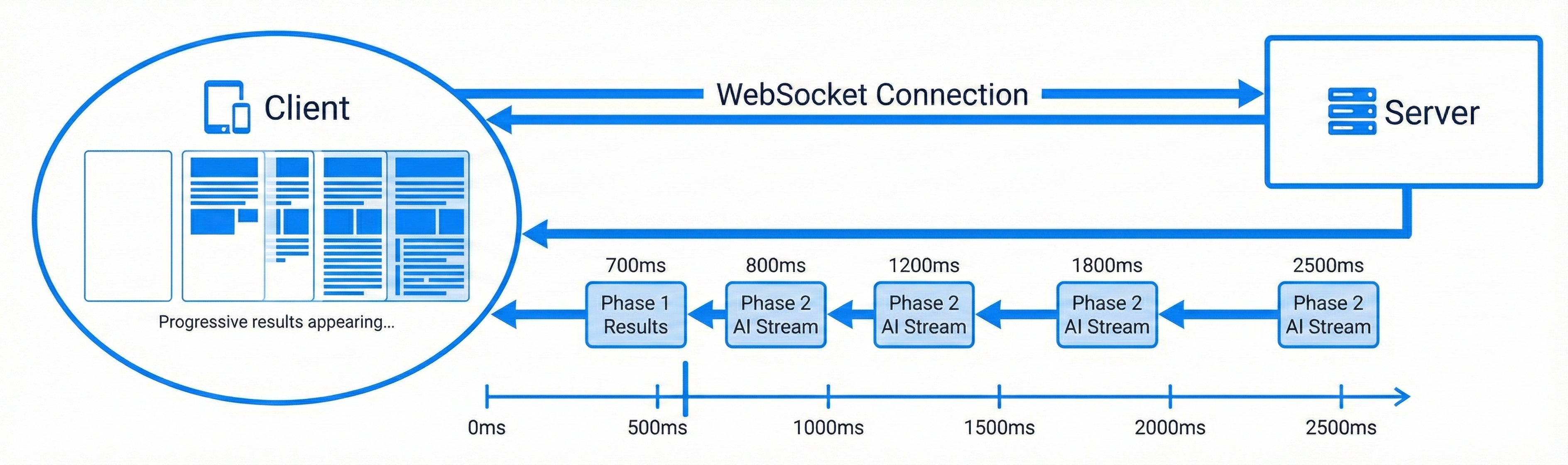

Full AI roundtrips typically take 2.5-7 seconds depending on model size. By the time results arrive, users have already formed a negative impression. Two-phase streaming solves this by delivering rule-based results at ~700ms (achievable with FastAPI and WebSocket) while AI analysis continues in the background. Users see value in under a second instead of waiting for the full LLM response.

1. The Perception Problem

Users don't experience latency in milliseconds. They experience it in feelings:

| Actual Latency | User Perception |

|---|---|

| <100ms | Instant |

| 100-300ms | Fast |

| 300-1000ms | Noticeable delay |

| >1000ms | Slow, frustrating |

Full AI roundtrips typically take 2.5-7 seconds depending on model size (~2.5s for small/fast LLMs, 5-7s for larger models like GPT-4 or Claude). By the time results arrive, users have already formed a negative impression—even if the results are excellent.

2. The Two-Phase Alternative

Phase 1 (~700ms): Rules, validation, consensus checks—anything deterministic. With FastAPI and WebSocket, this is achievable including network roundtrip.

Phase 2 (2.5-7s total): AI analysis, pattern detection, natural language explanations. Small LLMs complete around 2.5s; larger models like GPT-4 or Claude can take 5-7s.

The user sees results at 700ms instead of waiting several seconds. That's the difference between "fast" and "slow" in user perception.

3. The Psychology of Progressive Disclosure

Research on perceived performance shows:

First Paint Matters Most

Users judge speed by when they see anything

Progressive Loading Feels Faster

Even if total time is identical

Uncertainty is Worse Than Waiting

A spinner with no progress is anxiety-inducing

Partial Results Reduce Abandonment

Users stay engaged when feedback arrives

The Core Insight

Two-phase streaming isn't about making AI faster. It's about making users feel like the system is faster by delivering value immediately and enhancing it progressively.

4. Implementation Architecture

Phase 1: Deterministic Processing

Phase 2: AI Analysis

5. The WebSocket Advantage

HTTP request/response forces you to wait for everything. WebSocket streaming lets you send partial results:

Even within Phase 2, AI responses can stream token-by-token if using a streaming LLM API.

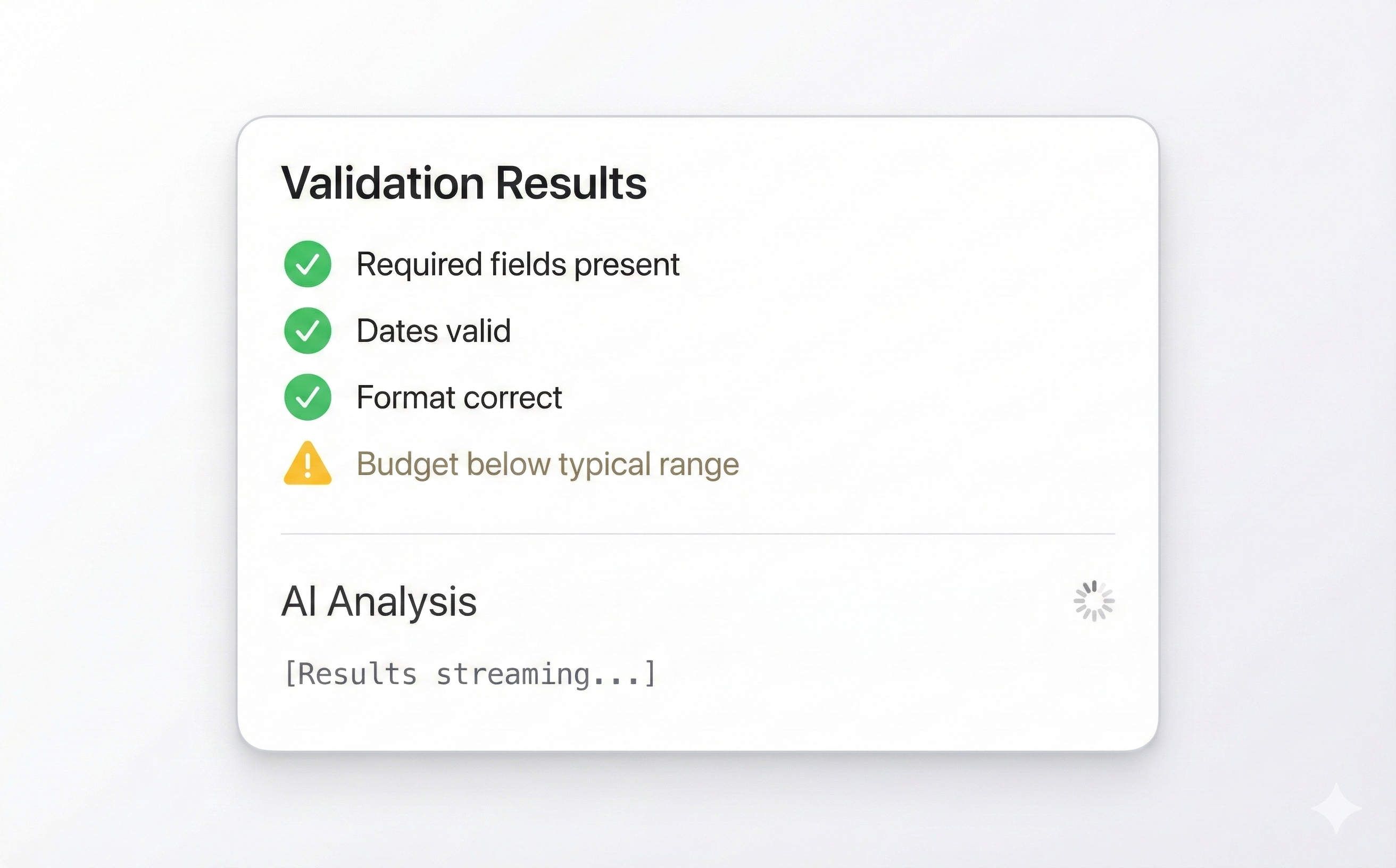

6. UX Design for Two-Phase Results

Visual hierarchy matters. Here's how to display results:

AI Analysis

[Results streaming...]

Users see validation results immediately. The AI section has a subtle loading indicator. When AI results arrive, they animate in without disrupting what's already visible.

7. Handling Phase 2 Failures

AI services can be slow or unavailable. Two-phase architecture handles this gracefully:

| Scenario | Phase 1 | Phase 2 | User Experience |

|---|---|---|---|

| Normal | ✓ ~700ms | ✓ ~2.5s total | Full results |

| AI slow | ✓ ~700ms | ⏳ 2-4s | Phase 1 instant, AI delayed |

| AI down | ✓ ~700ms | ✗ timeout | Phase 1 results only |

The system never blocks on AI. Users always get Phase 1 results, with AI as enhancement.

8. When to Use Two-Phase Streaming

Good Candidates

- Form validation with AI suggestions

- Search with AI-enhanced results

- Content analysis with both rules and intelligence

- Any workflow where instant feedback + deeper analysis both matter

Not Necessary

- Pure AI tasks (chat, generation) where there's no fast path

- Batch processing where latency doesn't matter

- Simple CRUD operations with no AI component

9. Measuring Success

| Metric | What It Tells You |

|---|---|

| Time to first result | Phase 1 performance |

| Time to complete | Total latency |

| Phase 2 success rate | AI reliability |

| User engagement after Phase 1 | Are partial results useful? |

| Abandonment rate | Comparison to single-phase |

10. Conclusion

Two-phase streaming isn't about making AI faster. It's about making users feel like the system is faster by delivering value immediately and enhancing it progressively.

When full AI roundtrips take 2.5-7 seconds (depending on model size), delivering Phase 1 results at ~700ms gives users immediate value while they wait for deeper analysis.

References

- Response Times: The 3 Important Limits — Nielsen Norman Group research on perceived performance

- Progress Indicators Make a Slow System Less Insufferable — Research on progressive disclosure

Want to know more about two-phase streaming? Contact me, I'm always happy to chat!